Here’s what you’ll find in this fortnight’s AI updates:

- OpenAI introduces new features for ChatGPT

- OpenAI responds to the New York Times lawsuit

- OpenAI approaches the 2024 elections with safety measures to counter deepfakes

- Australia considers regulating high-risk AI

- FTC asks AI Model-as-a-Service companies to uphold privacy commitments

- Draft bill introduced in the US Congress for AI risk management at the federal level

- U.S. Representatives introduce AI Foundation Model Transparency Act for Enhanced Accountability

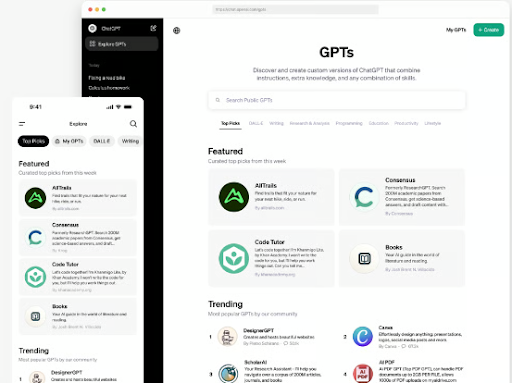

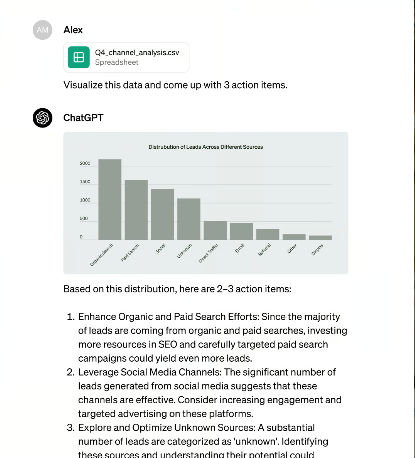

OPENAI INTRODUCES NEW FEATURES FOR CHATGPT

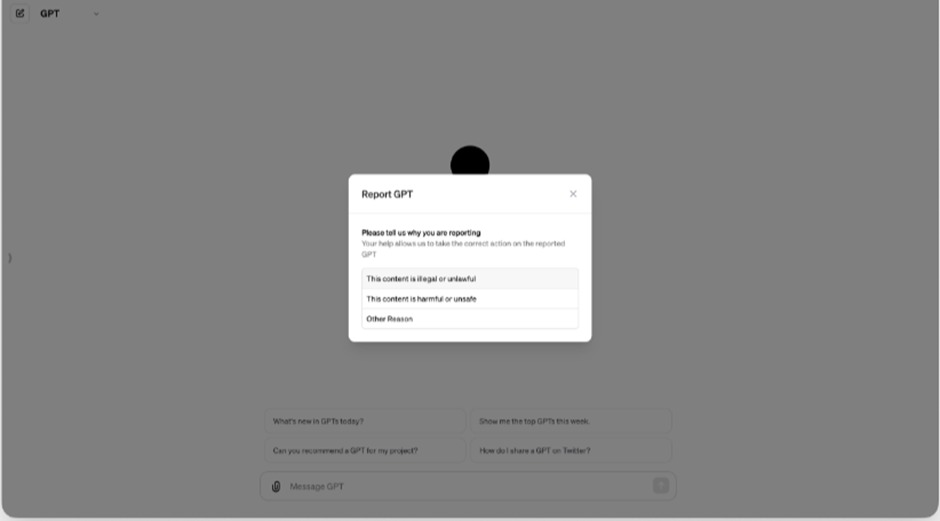

GPT Store

On January 10, 2024, OpenAI launched the GPT Store, available to ChatGPT Plus, Team, and Enterprise users. The store features a variety of GPTs built by partners and the community, organized into categories such as DALL·E, writing, research, programming, education, and lifestyle. Users can browse popular and trending GPTs on a community leaderboard and discover newly featured GPTs each week.

ChatGPT Team

On January 10, 2024, OpenAI introduced ChatGPT Team, a new plan for teams of all sizes that gives customers access to GPT-4, DALL·E 3, a workspace for team management, and custom GPTs.

OPENAI RESPONDS TO THE NEW YORK TIMES LAWSUIT

On January 8, 2024, OpenAI responded to the New York Times lawsuit in a blog post and clarified its intent.

OpenAI disagreed with the Times’ claims and asserted that training AI models on publicly available internet materials is fair use, a position it says is supported by long-standing precedent and accepted by a wide range of groups and organizations. It further argued that jurisdictions such as the EU, Japan, and Singapore have laws that permit training models on copyrighted content.

OpenAI also claims that the New York Times has been withholding information. According to OpenAI, the two companies had been in ongoing partnership negotiations, during which OpenAI emphasized that the Times’ content contributed minimally to model training, so the lawsuit came as a surprise.

Earlier, on December 27, 2023, the New York Times sued OpenAI and Microsoft, contending that articles published by the Times were used to train OpenAI’s chatbots.

OPENAI APPROACHES THE 2024 ELECTIONS WITH SAFETY MEASURES TO COUNTER DEEPFAKES

On January 15, 2024, OpenAI said in a blog post that, ahead of the 2024 elections taking place in democracies around the world, it is elevating accurate voting information, enforcing its usage policies, and improving transparency, and that it has dedicated a cross-functional team to election work.

OpenAI has been working to prevent deepfakes and chatbots that impersonate candidates. Its Usage Policies prohibit building applications for political campaigning, chatbots that pretend to be real people (whether public figures or not), and applications that misrepresent election-related information. OpenAI is also working on methods to make images generated by DALL·E 3 traceable.

Earlier, in November 2023, Meta stated that it would not allow political advertisers to use its generative AI advertising tools.

AUSTRALIA CONSIDERS REGULATING HIGH-RISK AI

On January 14, 2024, the Australian government announced that it would set up an advisory body to work with government, industry, and academic experts on a legislative framework governing the use of AI in high-risk settings such as law enforcement, healthcare, and job recruitment. The move follows a discussion paper titled ‘Safe and Responsible AI in Australia’, published by the Australian Government on June 1, 2023, which sought input on steps Australia could take to mitigate potential AI risks.

As reported, the body will primarily be tasked with defining what counts as high-risk and advising whether a new AI Act should be created or existing laws amended. Notably, the government is not looking to follow the European Union, which has agreed to ban AI uses that pose an unacceptable risk.

FTC ASKS AI MODEL-AS-A-SERVICE COMPANIES TO UPHOLD PRIVACY COMMITMENTS

The Federal Trade Commission (FTC) is cautioning Model-as-a-Service AI companies to honor their user privacy and confidentiality commitments. Acknowledging the data-intensive nature of AI development, the FTC highlights the potential conflict between companies’ appetite for data and their privacy obligations. Violations, including unauthorized data use and misleading practices, may lead to enforcement action. The FTC warns that failing to disclose material facts or misusing data can not only compromise user privacy but also harm fair competition, potentially violating both antitrust and consumer protection laws. The advisory reinforces that AI companies are not exempt from existing legal frameworks.

DRAFT BILL INTRODUCED IN THE US CONGRESS FOR AI RISK MANAGEMENT AT THE FEDERAL LEVEL

On January 11, 2024, a group of members of Congress introduced the draft “Federal Artificial Intelligence Risk Management Act”. The bill would require US federal agencies to adopt the “Artificial Intelligence Risk Management Framework” developed by the National Institute of Standards and Technology (NIST), part of the US Department of Commerce, which was designed as a voluntary framework for governments and businesses around the world. The bill would also create new reporting requirements to ensure that agencies comply with the framework, direct certain agencies to provide support and guidance on it, and require NIST to complete a study reviewing existing standards for testing, evaluating, verifying, and validating AI acquisitions.

U.S. REPRESENTATIVES INTRODUCE AI FOUNDATION MODEL TRANSPARENCY ACT FOR ENHANCED ACCOUNTABILITY

U.S. Representatives Anna Eshoo and Don Beyer have introduced the AI Foundation Model Transparency Act to address concerns about AI transparency. The proposed legislation targets foundation models with over one billion parameters that can be applied across a wide range of contexts. The Federal Trade Commission (FTC) would set standards requiring covered entities to disclose information about their AI foundation models. The Act aims to mitigate copyright infringement risks and potential harms from biased outputs. The FTC would enforce the resulting rules, which would apply to entities whose models exceed certain monthly usage or output thresholds. The legislation also sets a two-year timeframe for the FTC to report to Congress, marking a step toward greater transparency in AI development.

Authors: Keshav Singh Rathore, Vanshika Mehra, Astha Singh, Shruti Gupta.